A look at GNSS in DS-015

We’ve spent a lot of time now analyzing airspeed. For a simple float differential pressure it has caused a lot of discussion, but it is time to move on to the true horrors of DS-015. Nothing exemplifies those horrors more than the GNSS message. This will be a long post because it will dive into exactly what is proposed for GNSS, and that is extremely complex.

What it should be

This is the information we actually need from a CAN GNSS sensor:

uint3 instance

uint3 status

uint32 time_week_ms

uint16 time_week

int36 latitude

int36 longitude

float32 altitude

float32 ground_speed

float32 ground_course

float32 yaw

uint16 hdop

uint16 vdop

uint8 num_sats

float32 velocity[3]

float16 speed_accuracy

float16 horizontal_accuracy

float16 vertical_accuracy

float16 yaw_accuracy

It is 56 bytes long and pretty easy to understand. Note that it includes yaw support, as yaw from GPS is becoming pretty common these days, and is in fact one of the key motivations for users buying the higher-end GNSS modules. When a float value in the above isn’t known, a NaN would be used. For example, if you have an NMEA sensor attached, and those mostly don’t report vertical velocity, then the 3rd element of velocity would be NaN. The same goes for any accuracies that you don’t get from the sensor.
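As a sanity check on that size, here is a minimal Python sketch that tallies the field widths listed above. The `FIELDS` table and the `total_bits` helper are illustrative names, not part of any UAVCAN tooling, and the tally assumes the fields are packed back to back with no padding.

```python
# Field widths in bits, copied from the proposed message above.
# Assumption: fields are bit-packed with no alignment padding.
FIELDS = [
    ("instance", 3), ("status", 3),
    ("time_week_ms", 32), ("time_week", 16),
    ("latitude", 36), ("longitude", 36),
    ("altitude", 32), ("ground_speed", 32),
    ("ground_course", 32), ("yaw", 32),
    ("hdop", 16), ("vdop", 16), ("num_sats", 8),
    ("velocity", 3 * 32),
    ("speed_accuracy", 16), ("horizontal_accuracy", 16),
    ("vertical_accuracy", 16), ("yaw_accuracy", 16),
]


def total_bits(fields):
    """Sum the bit widths of all fields."""
    return sum(width for _, width in fields)


bits = total_bits(FIELDS)
whole_bytes, spare_bits = divmod(bits, 8)
print(bits, whole_bytes, spare_bits)
```

Under that packing assumption the tally comes to 56 whole bytes plus a few spare bits.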

I should note that the above structure is a big improvement over the one in UAVCAN v0, which requires a bunch of separate messages to achieve the same thing.

GNSS in DS-015

Now let’s look at what GNSS would be like using current DS-015. Following the idiom of UAVCAN v1 the GNSS message is a very deeply nested set of types. It took me well over an hour to work out what is actually in the message set for GNSS as the nesting goes so deep.

To give you a foretaste though, to get the same information as the 56 byte message above you need 243 bytes in DS-015, and even then it is missing some key information.

How does it manage to expand such a simple set of data to 243 bytes? Here are some highlights:

- there are 55 covariance floats. wow

- there are 6 timestamps. Some of the timestamps have timestamps on them! Some of the timestamps even have a variance on them.

I’m sure you must be skeptical by now, so I’ll go into it in detail. I’ll start from the top level and work down to the deepest part of the tree of types.

Top Level

The top level of GNSS is this:

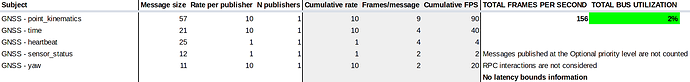

# point_kinematics reg.drone.physics.kinematics.geodetic.PointStateVarTs 1...100

# time reg.drone.service.gnss.Time 1...10

# heartbeat reg.drone.service.gnss.Heartbeat ~1

# sensor_status reg.drone.service.sensor.Status

the “1…100” is the range of update rates. This is where we hit the first snag. It presumes you’ll be sending the point_kinematics fast (typical would be 5Hz or 10Hz) and the other messages less often. The problem with this is it means you don’t get critical information that the autopilot needs on each update. So you could fuse data from the point_kinematics when the status of the sensor is saying “I have no lock”. The separation of the time from the kinematics also means you can’t do proper jitter correction for transport timing delays.
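To make the stale-status problem concrete, here is a small Python simulation. It is only a sketch: the function name, the timings and the idea that the consumer gates fusion on the last status it received are all assumptions for illustration, and the status period is assumed to be a multiple of the kinematics period.

```python
def updates_fused_without_lock(kin_period_ms, status_period_ms,
                               lock_lost_ms, duration_ms):
    """Count kinematics updates fused while the last received status
    still says 'fix' although the receiver has already lost lock."""
    reported_fix = False
    fused_without_lock = 0
    for t in range(0, duration_ms + 1, kin_period_ms):
        # A status message arrives only every status_period_ms.
        if t % status_period_ms == 0:
            reported_fix = t < lock_lost_ms
        actually_locked = t < lock_lost_ms
        # The consumer can only gate on the last status it saw.
        if reported_fix and not actually_locked:
            fused_without_lock += 1
    return fused_without_lock


# 10Hz kinematics, 1Hz status, lock lost 150ms after a status message.
slow_status = updates_fused_without_lock(100, 1000, 150, 2000)
# Same scenario with the status carried on every update.
fast_status = updates_fused_without_lock(100, 100, 150, 2000)
print(slow_status, fast_status)
```

With the status on every update the count drops to zero, which is the argument for carrying the fix status in the same message as the position.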

point_kinematics - 74 bytes

The first item in GNSS is point_kinematics. It is the following:

reg.drone.physics.kinematics.geodetic.PointStateVarTs:

74 bytes

uavcan.time.SynchronizedTimestamp.1.0 timestamp

PointStateVar.0.1 value

Breaking down the types we find:

uavcan.time.SynchronizedTimestamp.1.0:

7 bytes

uint56

Keep note of this SynchronizedTimestamp, we’re going to be seeing it a lot.

PointStateVar.0.1:

67 bytes

PointVar.0.1 position

reg.drone.physics.kinematics.translation.Velocity3Var.0.1 velocity

Looking into PointVar.0.1 we find:

PointVar.0.1 position:

36 bytes

Point.0.1 value

float16[6] covariance_urt

and there we have our first covariances. I’d guess most developers will just shrug their shoulders and fill in zero for those 6 float16 values. The chances that everyone treats them in a consistent and sane fashion is zero.
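For anyone wondering where the 6 comes from: a symmetric n by n covariance matrix has n(n+1)/2 unique entries, so 3 axes give 6 values. The Python sketch below assumes `covariance_urt` means the upper-right triangle in row-major order; `urt_length` and `pack_urt` are made-up helper names and the matrix values are invented.

```python
def urt_length(n):
    """Number of unique entries in a symmetric n x n matrix."""
    return n * (n + 1) // 2


def pack_urt(matrix):
    """Flatten the upper-right triangle of a square matrix.

    Assumption: row-major order, diagonal included.
    """
    n = len(matrix)
    return [matrix[r][c] for r in range(n) for c in range(r, n)]


# Made-up 3x3 position covariance, for illustration only.
cov = [
    [4.0, 0.5, 0.1],
    [0.5, 3.0, 0.2],
    [0.1, 0.2, 9.0],
]
packed = pack_urt(cov)
print(urt_length(3), urt_length(6), packed)
```

The same formula explains the `float16[21]` arrays that show up on 6-element states.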

Ok, so now we need to parse Point.0.1:

Point.0.1:

24 bytes

float64 latitude # [radian]

float64 longitude # [radian]

uavcan.si.unit.length.WideScalar.1.0 altitude

there at last we have the latitude/longitude. Instead of 36 bits for UAVCAN v0 (which gives mm accuracy) we’re using float64, which allows us to get well below the atomic scale. Not a great use of bandwidth.
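To put a number on the mm claim, here is a rough Python sketch of what a 36 bit scaled integer gives you. The 1e-8 degree scaling is an assumption (it is not stated in the field list above), and the helper names are illustrative.

```python
import math

EARTH_RADIUS_M = 6378137.0   # WGS-84 equatorial radius
COUNTS_PER_DEG = 10**8       # assumption: 1 count = 1e-8 degrees


def metres_per_count():
    """Ground distance at the equator for one integer count."""
    return math.radians(1.0 / COUNTS_PER_DEG) * EARTH_RADIUS_M


def fits_signed(value, bits):
    """True if value fits in a two's complement integer of that width."""
    return -(1 << (bits - 1)) <= value <= (1 << (bits - 1)) - 1


max_longitude = 180 * COUNTS_PER_DEG
print(metres_per_count())
print(fits_signed(max_longitude, 36), fits_signed(max_longitude, 32))
```

So one count is a little over a millimetre on the ground, and the full longitude range fits in 36 bits where it would not fit in 32.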

What about altitude? That is a WideScalar:

uavcan.si.unit.length.WideScalar.1.0:

8 bytes, float64

yep, another float64. So atomic scale vertically too.

Back up to our velocity variable (from PointStateVar.0.1) we see:

reg.drone.physics.kinematics.translation.Velocity3Var.0.1:

31 bytes

uavcan.si.sample.velocity.Vector3.1.0 value

float16[6] covariance_urt

so, another 6 covariance values. More confusion, more rubbish consuming the scant network resources.

Looking inside the actual velocity in the velocity we see:

uavcan.si.sample.velocity.Vector3.1.0:

19 bytes

uavcan.time.SynchronizedTimestamp.1.0

float32[3] velocity

there is our old friend SynchronizedTimestamp again, consuming another useless 7 bytes.

Now we get to the Time message in GNSS:

time: reg.drone.service.gnss.Time

21 bytes

reg.drone.physics.time.TAI64VarTs.0.1 value

uavcan.time.TAIInfo.0.1 info

Diving deeper we see:

reg.drone.physics.time.TAI64VarTs.0.1:

19 bytes

uavcan.time.SynchronizedTimestamp.1.0 timestamp

TAI64Var.0.1 value

yes, another SynchronizedTimestamp! And what is this timestamp timestamping? A timestamp. You’ve got to see the funny side of this.

Looking into TAI64Var.0.1 we see:

TAI64Var.0.1:

12 bytes

TAI64.0.1 value

float32 error_variance

so there we have it. A timestamped timestamp with a 32 bit variance. What the heck does that even mean?

Completing the timestamp type tree we have:

TAI64.0.1:

8 bytes

int64 tai64n

so finally we have the 64 bit time. It still hasn’t given me the timestamp that I actually want though. I want the iTOW. That value in milliseconds tells me about the actual GNSS fix epochs. Tracking that timestamp in its multiple of 100ms or 200ms is what really gives you the time info you want from a GNSS. Can I get it from the huge tree of timestamps in DS-015? Maybe. I’m not sure yet if it’s possible.
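As an illustration of why the iTOW is so useful, the Python sketch below counts how many fix epochs have elapsed between two messages, which immediately tells you about dropped fixes. The function name is made up, and it assumes the iTOW is an integer number of milliseconds that wraps at the end of the GPS week.

```python
MS_PER_WEEK = 7 * 24 * 3600 * 1000


def epochs_elapsed(prev_itow_ms, itow_ms, fix_period_ms):
    """Number of fix epochs between two iTOW values.

    The modulo handles the wrap at the end of the week. A result of 1
    means consecutive fixes, anything larger means fixes were missed.
    """
    delta_ms = (itow_ms - prev_itow_ms) % MS_PER_WEEK
    return delta_ms // fix_period_ms


print(epochs_elapsed(1000, 1200, 200))        # consecutive 5Hz fixes
print(epochs_elapsed(1000, 1600, 200))        # two fixes went missing
print(epochs_elapsed(604799900, 100, 200))    # across the week wrap
```

None of this depends on when the message arrived on the bus, which is what makes it a good basis for jitter correction.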

Now on to the heartbeat. This is where we finally know what the status is. Note that the GNSS top level docs suggest this is sent at 1Hz. There is no way we can do that, as it contains information we need before we can fuse the other data into the EKF.

A heartbeat is a reg.drone.service.gnss.Heartbeat

reg.drone.service.gnss.Heartbeat:

25 bytes

reg.drone.service.common.Heartbeat.0.1 heartbeat

Sources.0.1 sources

DilutionOfPrecision.0.1 dop

uint8 num_visible_satellites

uint8 num_used_satellites

bool fix

bool rtk_fix

here we finally find out the fix status. But we can’t differentiate between 2D, 3D, 3D+SBAS, RTK-Float and RTK-Fixed, which are all distinct levels of fix and are critical for users and log analysis. Instead we get just 2 bits (presumably to keep the protocol compact?).
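Here is a short Python sketch of the information loss. The `FixStatus` values and the mapping onto the two bools are assumptions for illustration, since the post does not define either of them.

```python
from enum import IntEnum


class FixStatus(IntEnum):
    """Hypothetical fix levels, small enough for a uint3 status field."""
    NO_FIX = 0
    FIX_2D = 1
    FIX_3D = 2
    FIX_3D_SBAS = 3
    RTK_FLOAT = 4
    RTK_FIXED = 5


def to_two_bools(status):
    """One plausible mapping onto the (fix, rtk_fix) pair.

    Assumption: rtk_fix is only set for an RTK fixed solution.
    """
    fix = status >= FixStatus.FIX_2D
    rtk_fix = status == FixStatus.RTK_FIXED
    return (fix, rtk_fix)


distinct_pairs = {to_two_bools(s) for s in FixStatus}
print(len(FixStatus), len(distinct_pairs))
```

With this mapping 2D, 3D, 3D+SBAS and RTK-Float all end up as the same pair, so the log can no longer tell them apart.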

We do however get both the number of used and number of visible satellites. That is somewhat handy, but is a bit tricky as “used” has multiple meanings in the GNSS world.

Looking deeper we have:

reg.drone.service.common.Heartbeat.0.1:

2 bytes

Readiness.0.1 readiness

uavcan.node.Health.1.0 health

which is made up of:

Readiness.0.1:

1 byte

truncated uint2 value

uavcan.node.Health.1.0:

1 byte

uint2

these are all sealed btw. If 2 bits ain’t enough then you can’t grow it.

Now on to the sources:

Sources.0.1:

48 bits, 6 bytes

bool gps_l1

bool gps_l2

bool gps_l5

bool glonass_l1

bool glonass_l2

bool glonass_l3

bool galileo_e1

bool galileo_e5a

bool galileo_e5b

bool galileo_e6

bool beidou_b1

bool beidou_b2

void5

bool sbas

bool gbas

bool rtk_base

void3

bool imu

bool visual_odometry

bool dead_reckoning

bool uwb

void4

bool magnetic_compass

bool gyro_compass

bool other_compass

void14

so we have lots of bits (48 of them) telling us exactly which satellite signals we’re receiving, but no information on what type of RTK fix we have.

What are “imu”, “visual_odometry”, “dead_reckoning” and “uwb” doing in there? Does someone really imagine you’ll be encoding your uwb sensors on UAVCAN using this GNSS service? Why??

Diving deeper we have the DOPs:

DilutionOfPrecision.0.1:

14 bytes

float16 geometric

float16 position

float16 horizontal

float16 vertical

float16 time

float16 northing

float16 easting

that is far more DOP values than we need. The DOPs are mostly there to keep users who are used to them happy. They want 1, or at most 2 values. We don’t do fusion with these as they are not a measure of accuracy. Sending 7 of them is silly.

Now onto sensor_status:

sensor_status: reg.drone.service.sensor.Status

reg.drone.service.sensor.Status:

12 bytes

uavcan.si.unit.duration.Scalar.1.0 data_validity_period

uint32 error_count

uavcan.si.unit.temperature.Scalar.1.0 sensor_temperature

yep, we have the temperature of the GNSS in there, along with an “error_count”. What sort of error? I have no idea. The doc says it is implementation-dependent.

The types in the above are:

uavcan.si.unit.duration.Scalar.1.0:

4 bytes

float32

uavcan.si.unit.temperature.Scalar.1.0:

4 bytes

float32

quite what you are supposed to do with the “data_validity_period” from a GNSS I have no idea.

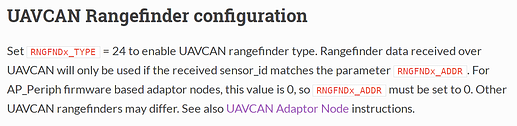

Ok, we’re done with what is needed for a GNSS that doesn’t do yaw, but as I mentioned, yaw from GNSS is one of the killer features attracting users to new products, so how would that be handled?

We get this:

# Sensors that are able to estimate orientation (e.g., those equipped with IMU, VIO, multi-antenna RTK, etc.)

# should also publish the following in addition to the above:

#

# PUBLISHED SUBJECT NAME SUBJECT TYPE TYP. RATE [Hz]

# kinematics reg.drone.physics.kinematics.geodetic.StateVarTs 1...100

so, our GNSS doing moving baseline RTK for yaw needs to publish reg.drone.physics.kinematics.geodetic.StateVarTs, presumably at the same rate as the above. For ArduPilot we fuse the GPS yaw in the same measurement step as the GPS position and velocity, so we’d like it at the same rate. We could split that out to a separate fusion step, but given yaw is just a single float, why not send it at the same time?

Well, we could, but in DS-015 it takes us 111 bytes to send that yaw. Hold onto your hat while I dive deep into how it is encoded.

reg.drone.physics.kinematics.geodetic.StateVarTs:

111 bytes

uavcan.time.SynchronizedTimestamp.1.0 timestamp

StateVar.0.1 value

another SynchronizedTimestamp. Why? Because more timestamps is good timestamps I expect.

Now into the value:

StateVar.0.1:

104 bytes

PoseVar.0.1 pose

reg.drone.physics.kinematics.cartesian.TwistVar.0.1 twist

yep, our yaw gets encoded as a pose and a twist. I’ll give you all of that in one big lump now, just so I’m not spending all day writing this post. Take a deep breath:

StateVar.0.1:

104 bytes

PoseVar.0.1 pose

reg.drone.physics.kinematics.cartesian.TwistVar.0.1 twist

reg.drone.physics.kinematics.cartesian.TwistVar.0.1:

66 bytes

Twist.0.1 value

float16[21] covariance_urt

Twist.0.1:

24 bytes

uavcan.si.unit.velocity.Vector3.1.0 linear

uavcan.si.unit.angular_velocity.Vector3.1.0 angular

PoseVar.0.1:

82 bytes

Pose.0.1 value

float16[21] covariance_urt

Pose.0.1:

40 bytes

Point.0.1 position

uavcan.si.unit.angle.Quaternion.1.0 orientation

uavcan.si.unit.angular_velocity.Vector3.1.0:

12 bytes

float32[3]

uavcan.si.unit.velocity.Vector3.1.0:

12 bytes

float32[3]

Point.0.1:

24 bytes

float64 latitude # [radian]

float64 longitude # [radian]

uavcan.si.unit.length.WideScalar.1.0 altitude

uavcan.si.unit.angle.Quaternion.1.0:

16 bytes

float32[4]

phew! That simple yaw has cost us 111 bytes, including 42 covariance variables, some linear and angular velocities, our latitude and longitude (again!!) and even a 2nd copy of our altitude, all precise enough for quantum physics. Then finally the yaw itself is encoded as a 16 byte quaternion, just to make it maximally inconvenient.
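For completeness, this is roughly what the receiving end has to do to get the single yaw value back out of the quaternion. It is a sketch only: it assumes the four floats are ordered w, x, y, z and that yaw is the rotation about the Z axis in the usual Z-Y-X Euler convention, neither of which is spelled out in the listing above.

```python
import math


def yaw_from_quaternion(w, x, y, z):
    """Yaw in radians from a unit quaternion (Z-Y-X Euler convention).

    Assumption: component order is w, x, y, z.
    """
    return math.atan2(2.0 * (w * z + x * y),
                      1.0 - 2.0 * (y * y + z * z))


# A pure 90 degree rotation about Z.
half_angle = math.radians(90.0) / 2.0
yaw = yaw_from_quaternion(math.cos(half_angle), 0.0, 0.0,
                          math.sin(half_angle))
print(math.degrees(yaw))
```

Compare that with reading one float32 out of the message.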

Conclusion

If you’ve managed to get this far then congratulations. If someone would like to check my work then please do. Diving through the standard to work out what actually goes into a service is a tricky task in UAVCAN v1, and it is very possible I’ve missed a turn or two.

The overall message should be pretty clear however. The idiom of DS-015 (and to a pretty large degree UAVCAN v1) is “abstraction to ridiculous degrees”. It manages to encode a simple 56 byte structure into a 243 byte monster, spread across multiple messages, with piles of duplication.
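The total is just the sum of the per-message sizes quoted through this post, as this small Python tally shows. The dictionary name is illustrative and the byte counts are the ones given in the section headings above.

```python
# Serialized sizes in bytes, as quoted earlier in this post.
DS015_GNSS_BYTES = {
    "point_kinematics": 74,
    "time": 21,
    "heartbeat": 25,
    "sensor_status": 12,
    "kinematics": 111,   # the message that carries the yaw
}
SIMPLE_MESSAGE_BYTES = 56

total_bytes = sum(DS015_GNSS_BYTES.values())
expansion = total_bytes / SIMPLE_MESSAGE_BYTES
print(total_bytes, round(expansion, 1))
```

That works out to a bit over four times the size of the simple message.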

We’re already running low on bandwidth with v0 at 1MBit. When we switch to v1 we will for quite a long time be stuck at 1MBit as there will be some node on the bus that can’t do higher rates. So keeping the message set compact is essential. Even when the day comes that everyone has FDCAN the proposed DS-015 GNSS will swallow up most of that new bandwidth. It will also swallow a huge pile of flash, as the structure of DS-015 and of v1 means massively deep nesting of parsing functions. So expect the expansion in bandwidth to come along with an equal (or perhaps greater?) expansion in flash cost.
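To get a feel for what this means on a classic CAN bus, here is a rough frame count in Python. The framing model is an assumption: 8 data bytes per frame, one of them used as a tail byte, and a 2 byte CRC added to any transfer that needs more than one frame. It also assumes all five DS-015 messages go out on every fix.

```python
import math


def frames_needed(payload_bytes, frame_data_bytes=8):
    """Frames for one transfer under the assumed framing model."""
    per_frame = frame_data_bytes - 1      # one tail byte per frame
    if payload_bytes <= per_frame:
        return 1                          # single frame, no CRC
    return math.ceil((payload_bytes + 2) / per_frame)


simple_frames = frames_needed(56)
ds015_frames = sum(frames_needed(n) for n in (74, 21, 25, 12, 111))
print(simple_frames, ds015_frames)
```

Multiply the two results by the fix rate to get frames per second for each option.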

The DS-015 “standard” should be burnt. It is an abomination.