A minute of weird questions and low-profile self-doubt.

I would like everyone who cares about bit layouts and also @scottdixon and @kjetilkjeka (even if they don’t) to squint at the following diagrams and see if one of them seems more sensible than the other.

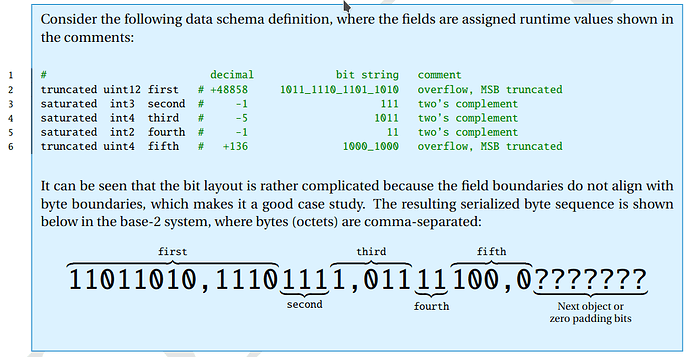

This diagram is titled little endian:

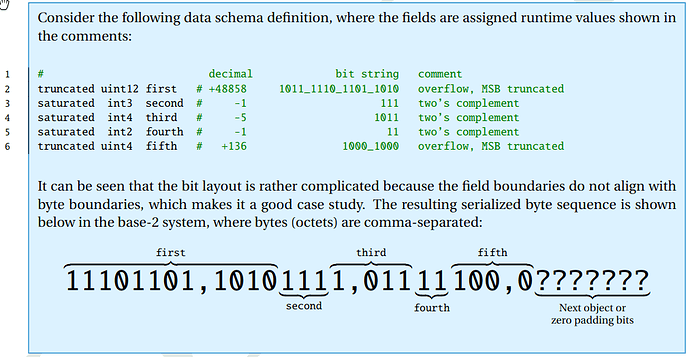

This one is big endian:

One can see here that the big-endian format preserves bit ordering continuity across the byte boundary, which makes the serialization and deserialization logic somewhat simpler in the general case.

The little-endian format makes the general case more complex: notice how the nibble 1110₂ is thrown all the way into the next byte even though it's supposed to be the most significant one. Its advantage is that it is natively supported by most modern microarchitectures, so byte-aligned primitives can be serialized by a trivial memcpy, no bit twiddling needed. (Architectures that support both big and little endian are still likely to run an OS or other software compiled for little-endian, so bi-endian support doesn't really help.)
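To make the byte-aligned case concrete, here is a minimal Python sketch. The function name and the choice of a uint16 followed by a uint32 are mine, purely for illustration. On a little-endian host the bytes produced here are the same ones a plain memcpy of the two variables would yield, which is the whole appeal.

```python
import struct


def serialize_aligned_le(u16_value: int, u32_value: int) -> bytes:
    """Serialize two byte-aligned unsigned integers in little-endian order.

    Hypothetical helper for illustration only.
    Format string: "<" = little-endian with no padding, H = uint16, I = uint32.
    """
    return struct.pack("<HI", u16_value, u32_value)


if __name__ == "__main__":
    # Least significant byte of each value comes first.
    print(serialize_aligned_le(0x1234, 0xDEADBEEF).hex())
```

No shifting or masking is involved as long as every field starts and ends on a byte boundary.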

Preserving bit ordering continuity lets one encode and decode serialized representations by shifting values into and out of a large arbitrary-precision integer, without the intermediate per-value byte swapping that we're forced to do in pyuavcan, for example:

https://github.com/UAVCAN/pyuavcan/blob/master/uavcan/transport.py#L94-L109
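For comparison, this is roughly what the shift-in/shift-out approach could look like with the bit-continuous (big-endian) layout. It is a sketch under my own assumptions, not the pyuavcan code linked above: the function names are made up, fields are unsigned, and the tail is zero-padded up to a whole byte.

```python
def pack_fields_msb_first(fields):
    """Pack (value, bit_width) pairs into bytes, most significant bit first.

    Hypothetical helper: each field is shifted into one big integer, so bit
    order stays continuous across byte boundaries and no byte swap is needed.
    """
    acc = 0
    total_bits = 0
    for value, width in fields:
        if not 0 <= value < (1 << width):
            raise ValueError(f"value {value} does not fit in {width} bits")
        acc = (acc << width) | value
        total_bits += width
    padding = -total_bits % 8  # zero bits appended to reach a byte boundary
    acc <<= padding
    return acc.to_bytes((total_bits + padding) // 8, "big")


def unpack_fields_msb_first(data, widths):
    """Inverse of pack_fields_msb_first for the given list of bit widths."""
    total_bits = len(data) * 8
    acc = int.from_bytes(data, "big")
    values = []
    consumed = 0
    for width in widths:
        consumed += width
        if consumed > total_bits:
            raise ValueError("not enough data for the requested fields")
        # Shift the field down to bit 0 and mask off everything above it.
        values.append((acc >> (total_bits - consumed)) & ((1 << width) - 1))
    return values


if __name__ == "__main__":
    encoded = pack_fields_msb_first([(0b1110, 4), (0xABC, 12)])
    print(encoded.hex())
    print(unpack_fields_msb_first(encoded, [4, 12]))
```

A 4-bit field followed by a 12-bit field lands in two bytes with the nibble at the top of the first byte, so the encoder never has to split a value around a byte boundary by hand.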